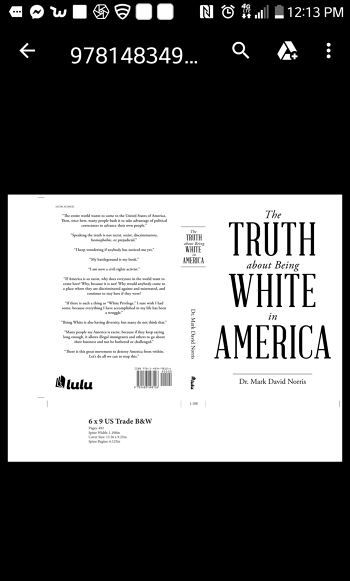

The Truth About Being White in America

Dr. Mark David Norris, author

The Truth About Being White in America exposes many of the lies that have been spread about white people in America since the 1960s. I address all of the major institutions, such as education, the media, our government, sports, and the military. I approach this issue from a positive standpoint rather than the negative one that many liberal Democrats and minority organizations always seem to promote. It is an eye-opening book that the entire nation must read. Once this book has been read, liberals can no longer demonize and vilify white Americans.